Azure Log Analytics ML: Using the evaluate operator with the app() or workspace() scope function

I just came back home from the MVP Summit in Redmond, USA, and I was welcomed by a snow-covered Gothenburg and a close friend who cheerfully told me he had purchased a house. As much as I love the MVP Summit, and always have so much fun, it’s always nice to come home to your loved ones. I’ll write a separate post on the summit, and I’ll make sure to post the many pictures I took as well. But for now, I’d like to share a solution to a peculiar problem that I came across while in Bellevue this week.

We use Azure Log Analytics at work, and we push our log entries and other metrics to Azure by using Application Insights. Azure Log Analytics uses its own clever query language that offers an array of functions, operators and plugins, some of them even allowing for in-line machine learning (ML). Although I’m not terribly good at machine learning, I still like to play around with ML and wanted to see if I could use it with our data.

As I’ve mentioned before in the blog, we have an Application Insights resource for each service that we have, and all our servers are connected to a workspace for that particular environment (production, QA, Dev etc.). This means that we often do queries across resources, to compare and contrast and to pull out diagrams that show the health of the services and VMs. You can do queries across resources by using the app("resourceName") or workspace("resourceName") scope function together with the union operator:

<span style="color: #3366ff;">union app("one").requests, app("two").requests | where "blap" | summarize "blip"</span>

However, when I tried using the evaluate operator with machine learning plugins such as autocluster() or diffpatterns(), I would get an error:

One or more pattern references were not declared. Detected pattern references

The use of the app() or workspace() scope function seemed to be the problem, not the union across several resources.

This didn’t work:

<span style="color: #3366ff;">workspace("vmPROD").Perf</span>

<span style="color: #3366ff;"> | evaluate autocluster()</span>

Neither did this:

<span style="color: #3366ff;">app("someService").traces</span>

<span style="color: #3366ff;"> | evaluate autocluster()</span>

This did:

<span style="color: #3366ff;">Perf</span>

<span style="color: #3366ff;"> | evaluate autocluster()</span>

The problem is that I wanted to evaluate across resources. At first I thought it might be a scope function limitation, but table(), which is also a scope function, worked.

This worked:

<span style="color: #3366ff;">table("Perf")</span>

<span style="color: #3366ff;"> | evaluate autocluster()</span>

I posted the question to StackOverflow and sent an email to the Azure Application Insights PM, but got no reply, so I kept looking.

In the end I decided to try the materialize() function. This function allows you to cache the result of a subquery, and it seems like I can use the machine learning functions against the cached result when using app() or workspace() to reference the resource or resources. This also works when doing joins, which is what I wanted to do across resources. There are two main limitations to think about: you can cache a result of at most 5 GB, and you have to use the let statement.
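As a minimal sketch, this is how the failing workspace() query from earlier might look once it is wrapped in materialize(). The assumption is that a single resource behaves the same way as the multi-resource case. The workspace name vmPROD is the one used above, and the one-hour filter on TimeGenerated is only there to keep the cached result small.

```kusto
// Cache the cross-resource subquery first; the let statement is required.
// The time filter is an illustrative assumption to stay well under the cache limit.
let cachedPerf = materialize(
    workspace("vmPROD").Perf
    | where TimeGenerated > ago(1h)
);
// Run the ML plugin against the cached result instead of the scoped table.
cachedPerf
| evaluate autocluster()
```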

Here is a working example that combines two resources with union:

<span style="color: #3366ff;">let joinResult = union app('Konstrukt.SL.CalculationEngine').requests, app('Konstrukt.SL.AggregationEngine').requests;

let cachedJoinResult = materialize(joinResult);

cachedJoinResult

| where success == false

| project session_Id, user_Id, appName, operation_Id, itemCount

| evaluate autocluster()</span>

The query looks at all the failed requests for two services and then creates clusters based on the session id, user id, app name, operation id and item count.

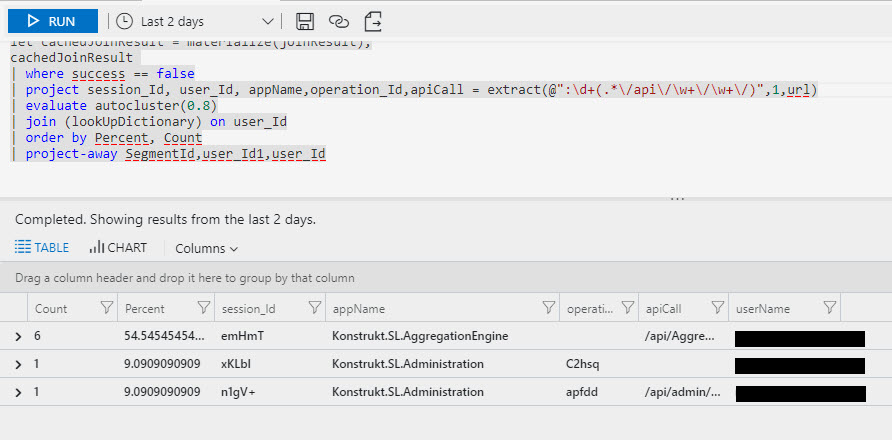

Here is a different version of the query above where I’m using a lookup table to get the actual usernames. Based on the last two days, it looks like there is a recurring theme with the Aggregation engine, a particular user and session, and an API call.
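A sketch of what such a lookup could look like, under a few assumptions: the userNames table is hypothetical and built inline with datatable, the ids and names in it are placeholders, and the two-day window is expressed as a filter on timestamp. It is not the exact query behind the result described above.

```kusto
// Hypothetical lookup table; the ids and names are placeholders, not real data.
let userNames = datatable(user_Id: string, userName: string)
[
    "user-1", "Example User One",
    "user-2", "Example User Two"
];
let joinResult = union app('Konstrukt.SL.CalculationEngine').requests, app('Konstrukt.SL.AggregationEngine').requests;
let cachedJoinResult = materialize(joinResult);
cachedJoinResult
| where timestamp > ago(2d)      // only the last two days
| where success == false
// Swap the raw user id for a readable name before clustering.
| join kind=leftouter (userNames) on user_Id
| project session_Id, userName, appName, operation_Id, itemCount
| evaluate autocluster()
```

A left outer join keeps failed requests whose user id is missing from the lookup table, so they still take part in the clustering with an empty userName.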

Neat stuff!


Last modified on 2018-03-10